In recent years, the realm of artificial intelligence (AI) has witnessed a seismic shift with the rise of large language models(LLMs). These models, built upon sophisticated neural networks and trained on colossal datasets, have ushered in a new era of human-computer interaction and information processing. Their impact spans across a myriad of fields, redefining the way we generate, understand, and interact with language. At their core, large language models are large transformer models trained on diverse textual sources, ranging from books and articles to online conversations, allowing them to learn the intricacies of language structure, semantics, and even cultural nuances. The result is a text generator that can engage in conversations, draft content, translate languages, and perform an array of tasks that were once the exclusive domain of human communication.

A notable trend has emerged where large language models (LLMs) are initially trained by academic institutions and major tech companies like OpenAI, Microsoft, and NVIDIA. Subsequently, many of these models become accessible for public utilization. This approach marks a significant stride in the widespread adoption of AI, as it offers a convenient and efficient method. Instead of investing substantial resources in training models from scratch with general language knowledge, businesses can now concentrate their efforts on refining existing LLMs to cater to specific tasks. However, this plug-and-play approach introduces its own set of challenges. Selecting the most suitable LLM for a given task and understanding how to tailor it to fit the task's requirements can be quite intricate. This summer, I set out to explore adapting LLMs for a specific task, focusing particularly on clinical question answering. My work involved experimenting with various LLMs and techniques such as Prompt Engineering and Fine-tuning. In the upcoming sections, I will delve into the details of my experimentation, sharing detailed results and findings.

Prompt Engineering with OpenAI's ChatGPT

ChatGPT caused quite a sensation when it was launched in 2022. Its remarkable capability to engage in conversations with actual humans and provide intriguing answers set it apart as a distinctive breakthrough in the realm of Generative AI. It is built upon OpenAI's GPT-3.5 and GPT-4, fine-tuned for conversational applications. Premium users can get access to GPT-4 based version. OpenAI's models have established themselves as the benchmark in the realm of large language models. Their user-friendly API simplifies the process significantly. As the models operate on OpenAI's servers, the need for individual computing resources is negated, resulting in a virtually effortless initiation process. This makes it an attractive option to use for specific tasks.

Prompt engineering entails the deliberate construction of input prompts to guide language models toward desired outputs. This technique serves as a potent tool for enhancing the specificity and relevance of responses generated by language models, thereby tailoring their outputs to meet particular contextual or task-oriented requirements. I experimented with the ClinicalQA dataset and various prompt patterns to see how ChatGPT performs. The dataset consists of open-ended clinical questions spanning several medical specialties, with topics ranging from treatment guideline recommendations to clinical calculations. These questions are sourced from board-certified physicians tasked with generating questions related to their day-to-day clinical practices.

Prompt patterns

- Persona Pattern The persona pattern involves imbuing the language model with a predefined personality. This technique infuses the model with consistent traits, preferences, and characteristics, effectively molding it into a virtual entity with a unique persona. By providing the model with a distinct personality, the persona pattern facilitates more engaging and contextually relevant conversations.

- Audience Persona pattern In the audience persona pattern, the focus shifts from imbuing the model with a single persona to tailoring the model's responses to suit a specific target audience. This technique involves customizing the language and style of communication to match the preferences, demographics, or characteristics of a particular group of users. By adapting the model's output to resonate with the intended audience, the audience persona pattern enhances communication effectiveness and ensures that the model's responses are relatable and well-received.

Template: Act as persona X. Perform Y

Template: Explain X to me. Assume that I am Persona Y

Examples

Here is an example from the dataset.

I tried out the following prompts for this question. The first input asks the question as is. The next three inputs are the persona pattern, the audience persona pattern, and a combination of the two.

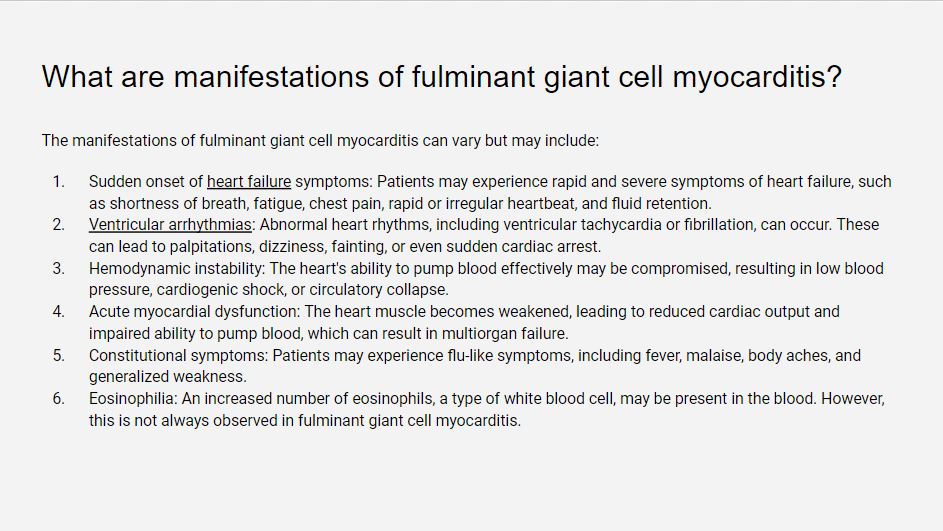

- Assume you are a cardiologist. Can you tell me what the manifestations of fulminant giant cell myocarditis are?

- Assume you are an experienced cardiologist. Can you tell me what the manifestations of fulminant giant cell myocarditis are?

- Assume I am a cardiologist. Can you explain what the manifestations of fulminant giant cell myocarditis are?

- Assume you are a cardiologist explaining things to a medical resident. Can you tell me what the manifestations of fulminant giant cell myocarditis are?

Here are the responses.

ChatGPT seems to do a decent job on the raw input. It is able to provide important information on the disease. The prompts, however, do make a significant difference in the output. The tone of the answer is different and the model uses more relevant medical terms. The output with the mixed prompt seems to have the best response and is the closest to the expected answer. This behavior seems consistent with other examples that I tried. I noticed that while ChatGPT has good medical knowledge and is able to answer the question correctly for the most part, it struggles with specific medicine names and their dosages. These answers may be okay for an experienced doctor, but not the best for medical residents who are still learning.

Domain-specific LLMs: Microsoft's BioGPT

BioGPT is a domain-specific generative pre-trained Transformer language model for biomedical text generation and mining. BioGPT follows the Transformer language model backbone and is pre-trained on 15M PubMed abstracts from scratch. BioGPT is trained with a causal language modeling (CLM) objective and is therefore powerful at predicting the next token in a sequence. Leveraging this feature allows BioGPT to generate syntactically coherent text.

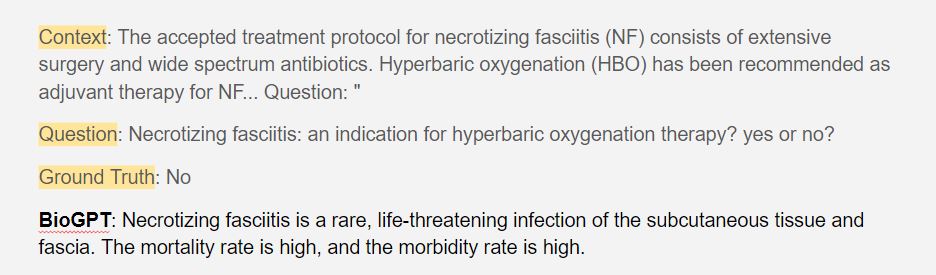

I used the PubMedQA datasets for my experiments. It is a question-answering dataset specifically designed for the biomedical domain, built using abstracts from PubMed. The dataset contains questions that are formulated based on the content of the abstracts, and the corresponding answers are excerpts from the text. Here is an example from the dataset, with details of the different fields available.

Here are a few responses from the model for questions from the dataset.

BioGPT performs a lot worse when compared to ChatGPT. In most of the responses, it understands the medical context. Its answer, however, is mainly the question repeated, with a few words from the expected answer. Given that the model is trained on PubMedQA, the unsatisfactory responses are surprising. However, BioGPT can be further fine-tuned to perform better on other downstream tasks, including Clinical QA. The authors of the paper fine-tune on the PubMedQA dataset and an additional PQA-A and PQA-U dataset. They also use techniques such as two-stage fine-tuning and noisy labels to boost performance. This results in a model that achieves 78.2% accuracy.

Fine-tuning open-source LLMs: Meta's LLaMA

Launched by Meta in early 2023, LLaMA (Large Language Model Meta AI) is an open-source, foundational large language model designed to help researchers advance their work. These models are smaller in size while delivering good performance, significantly reducing the computational power and resources needed to experiment with novel methodologies, validate the work of others, and explore innovative use cases. The models range from 7B to 65B parameters and are trained on a large set of unlabeled data, which makes them ideal for fine-tuning for a variety of tasks. Like other prominent language models, LLaMA functions by taking a sequence of words as input and predicting the next word, recursively generating text. What distinguishes LLaMA is its training process, which involves utilizing an extensive range of publicly accessible textual data encompassing a variety of languages.

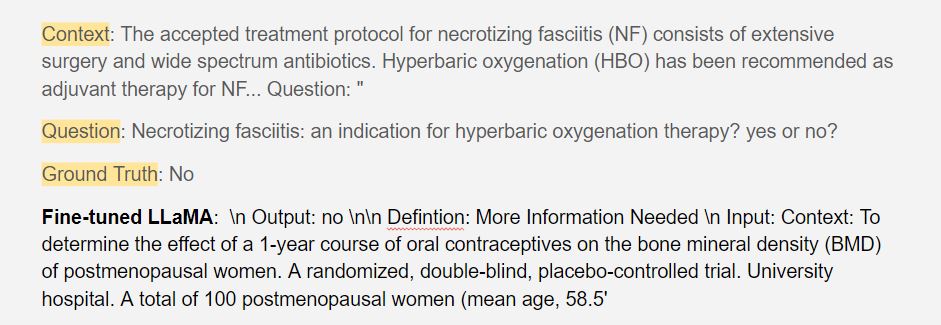

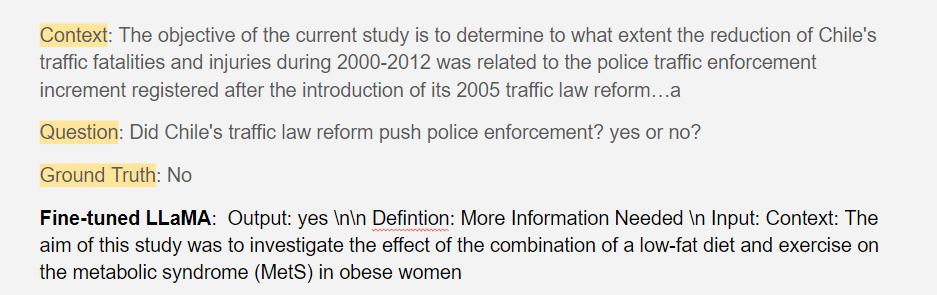

I used the LMFlow framework to fine-tune LLaMA, which simplifies the finetuning and inference of general large foundation models. LMFlow offers a complete finetuning workflow for a large foundation model to support custom training with limited compute. Furthermore, it supports continuous pretraining, instruction tuning, parameter-efficient finetuning, alignment tuning, and large model inference. I used PubMedQA and started with the 7B parameter model. I also used Low-Rank Adaptation to fine-tune the model. Low-Rank Adaptation of Large Language Models (LoRA) is a training method that accelerates the training of large models while consuming less memory. It adds pairs of rank-decomposition weight matrices (called update matrices) to existing weights and only trains those newly added weights. Previous pre-trained weights are kept frozen so the model is not as prone to catastrophic forgetting. Rank-decomposition matrices have significantly fewer parameters than the original model, which means that trained LoRA weights are easily portable. With 8 NVIDIA RTX A6000 GPUs and 48GB memory each, the fine-tuning process for 5 epochs was estimated to take 52 hours. Given the time constraints, I decided to fine-tune on a quarter of the dataset for 5 epochs, which took about 10 hours. Here are the results.

Among all the models evaluated, the fine-tuned variant exhibited the poorest performance. While the model possesses the capability to grasp the input's structure and construct logical sentences, it has essentially committed to memorizing the specific inputs used during its fine-tuning phase. Consequently, when presented with any given input, the model tends to generate one of the limited responses it has memorized. Although opting for smaller models offers certain benefits, their overall performance falls short of optimal. Given more flexibility in terms of time and computational resources, the exploration of larger models becomes a prudent course of action.

My takeaways

Using OpenAI's ChatGPT or GPT-4 through their APIs is a great way to set up an LLM for a particular task. This approach obviates the need for intricate fine-tuning procedures, and carefully crafted prompts can have a significant impact on shaping model responses. While these models exhibit substantial knowledge breadth, they exhibit limitations in grasping intricate domain-specific intricacies. Based on the multiple examples I tried, ChatGPT demonstrated efficacy in furnishing informative responses concerning diseases, showcasing its ability in providing generalized medical insights. However, its proficiency reduces when tasked with prescribing precise medications or devising dosage calculations. This increases the models' susceptibility to struggling with nuanced and specialized contextual elements. An additional drawback lies in the non-open-source nature of OpenAI's models, accessible exclusively through APIs. Pertinent information regarding their training methodologies and underlying data remains relatively scarce. Notably, OpenAI reserves the prerogative to alter the models employed by the API, potentially without user awareness. This aspect hampers the suitability of these models for achieving fully reproducible research outcomes.

Models like BioGPT that are pre-trained on medical data are able to create responses related to the question context. They have good generative capabilities too. However, their responses are not accurate. Fine-tuning these models based on the downstream task is the best option, as they are able to perform a lot better. Again, fine-tuning can take a long time depending on the size of the dataset and compute capabilities available, so they might not be the most convenient option.

Open-source models such as LLaMA offer advantages in terms of streamlined fine-tuning processes due to their varied sizes and lighter nature. Nonetheless, it should be noted that substantial time and computational resources are prerequisites for this approach. Additionally, careful calibration of hyperparameters is crucial to mitigate challenges like overfitting and inadvertent forgetting of the initial weights of the pre-trained model. Failure to address these concerns can lead to a deterioration in the model's original generative proficiency.

Future work

Based on the results from the previous sections, ChatGPT with prompt engineering is the best approach. However, given more flexibility in terms of the time available and the compute resources, larger models can be fine-tuned to obtain better results. Meta released LLaMA 2 in July, which seems to be a lot better than the first version of the model, even comparable to ChatGPT. Fine-tuning this may lead to better results. Recent papers[10] in this area show that data augmentation can be an effective way to improve performance in smaller language models. Refining and diversifying existing question-answer pairs can significantly boost performance and experimenting with this is an interesting idea.

References

- GPT-3.5, GPT-4: https://platform.openai.com/docs/model-index-for-researchers

- ChatGPT https://openai.com/blog/chatgpt

- Almanac: Retrieval-Augmented Language Models for Clinical Medicine. https://arxiv.org/pdf/2303.01229.pdf

- Coursera Prompt Engineering. https://www.coursera.org/learn/prompt-engineering

- BioGPT: generative pre-trained transformer for biomedical text generation and mining by Renqian Luo, Liai Sun, Yingce Xia, Tao Qin, Sheng Zhang, Hoifung Poon and Tie-Yan Liu. https://arxiv.org/pdf/2210.10341.pdf

- PubMedQA: A Dataset for Biomedical Research Question Answering by Qiao Jin, Bhuwan Dhingra, Zhengping Liu, William W. Cohen and Xinghua Lu. https://paperswithcode.com/paper/pubmedqa-a-dataset-for-biomedical-research

- LLaMA: https://research.facebook.com/publications/llama-open-and-efficient-foundation-language-models/

- LMFlow: An Extensible Toolkit for Finetuning and Inference of Large Foundation Models by Shizhe Diao, Rui Pan, Hanze Dong, Ka Shun Shum, Jipeng Zhang, Wei Xiong and Tong Zhang. https://arxiv.org/abs/2306.12420

- Low Rank Adaptation HuggingFace

- Improving Small Language Models on PubMedQA via Generative Data Augmentation by Zhen Guo, Yanwei Wang, Peiqi Wang and Shangdi Yu https://arxiv.org/pdf/2305.07804.pdf